Product Update - Online Evaluation

This month we have three exciting updates to share with you!

Online Evaluation

The online evaluation feature has been revamped:

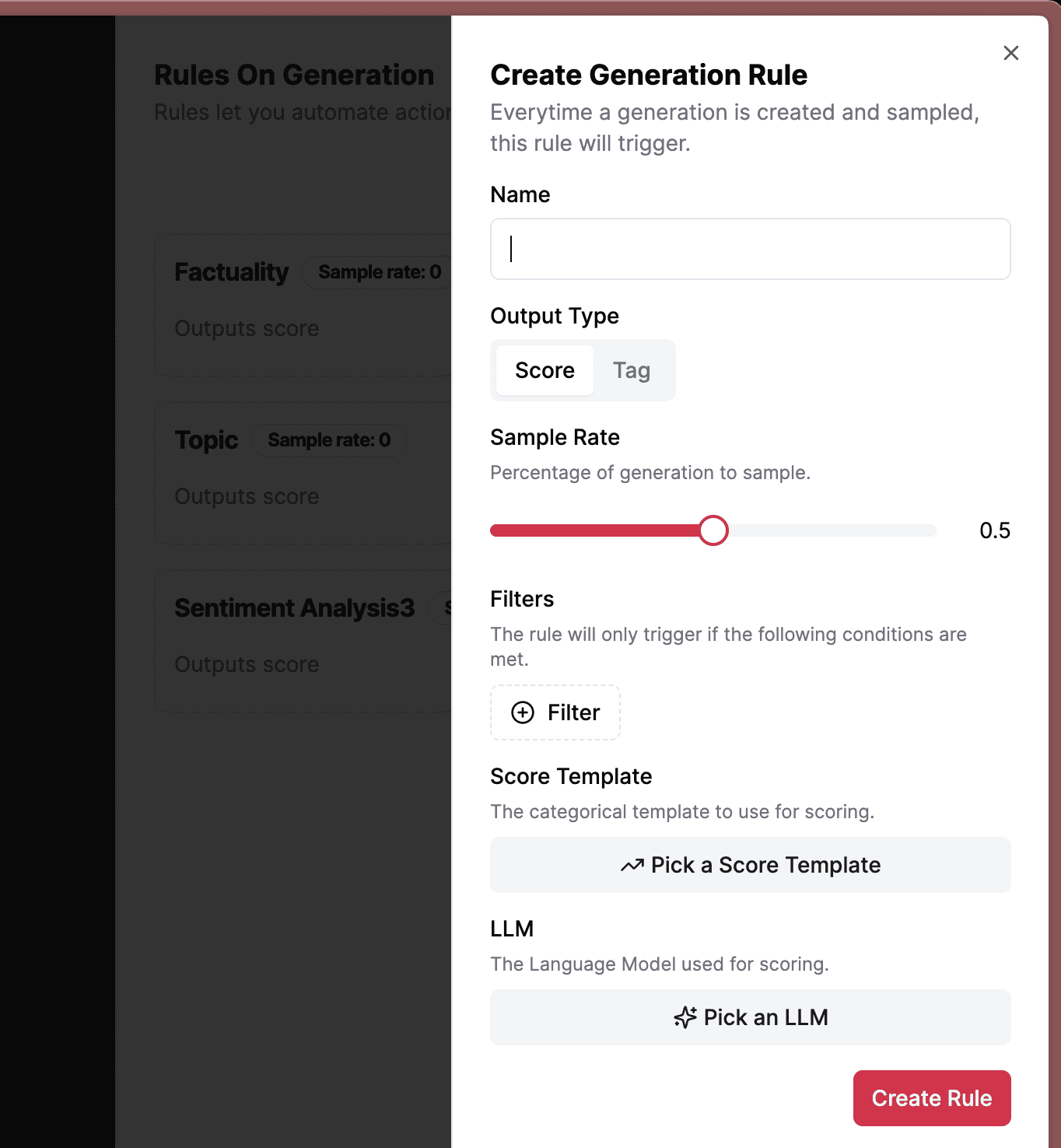

The flow for creating an online evaluation rule has been reworked.

You can now run online evaluation on runs (agents, chains, workflows), in addition to generations.

Online evaluation automation rules now support tag creation in addition to score creation.

Rule parameters are now editable.

Roles

There are now three roles: Admin, AI Engineer and Domain Expert. We plan to add more based on your feedback.

Improvements

For user feedback and annotations, we now track which user created each score.

The dashboard has been improved overall, and it now includes an additional plot for tracking usage per agent or chain type.

A default project is now available when you onboard to Literal AI.

Improved SDK: a new version of the TypeScript SDK is available.

New integration with Mistral AI.

New bulk actions let you create datasets from generations.

Miscellaneous

We changed the data model for how steps are stored and migrated existing data accordingly. This simplifies step management and improves both the user experience and the developer experience.

Better UX for multimodal inputs and outputs.

Check out the full release notes: https://docs.getliteral.ai/more/release-notes
